This was a joint effort by Dan McKay and me, with him driving the project from After Effects. My part lay mostly in populating the screens with footage. But there was a particular problem: the clients did not, or could not, sign off on all the footage. Knowing that in advance, I thought of making the footage generation procedural, so that Dan could render his bits and I could create the screen footage separately, letting us work in parallel.

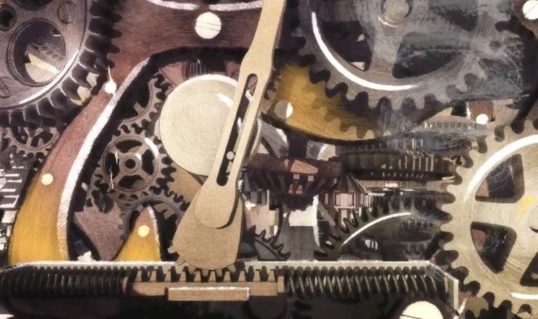

The main technique was UV mapping (STMapping in Nuke) plus floating-point ID mattes. UV mapping alone wasn’t enough: although I could easily map footage onto a screen, there were hundreds of screens that needed semi-random footage running on them. Using RGB mattes was out of the question, since I would still end up with too many mattes to manage. I decided that I needed to mark these screens by ID, and so approached it as a numbers-based AOV render.

This was done by first UV mapping all the screens into one UV map, then creating two ramps (one in U and the other in V) with gradients and precise multiply calculations, which enabled colour values well past 1.0. The idea is that the first screen would have a surface value of 0, the next 1.0, the one after that 2.0, and so on. When rendered from Maya as an .exr, every screen looks white, but when colour-picked in Nuke, the floating-point values are recognised.
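To make the ID idea concrete, here is a minimal sketch in plain Python rather than the actual Maya shading network. It assumes each screen is a simple rectangle in a tiny image, and the names `make_id_pass` and `id_matte`, the background value of -1.0, and the tolerance are all hypothetical choices for illustration. It shows only the principle: one float channel holds a whole-number ID per screen, and a matte for any screen is recovered by comparing against that number.

```python
def make_id_pass(screens, width, height, background=-1.0):
    """Build a single-channel float image where screen i is filled with float(i).

    screens: list of (x0, y0, x1, y1) rectangles (hypothetical stand-ins
    for the rendered screen surfaces).
    background: assumed value for non-screen pixels, chosen so that it
    cannot be confused with screen 0.
    """
    img = [[background] * width for _ in range(height)]
    for screen_id, (x0, y0, x1, y1) in enumerate(screens):
        for y in range(y0, y1):
            for x in range(x0, x1):
                img[y][x] = float(screen_id)
    return img


def id_matte(id_pass, screen_id, tolerance=0.5):
    """Return a 0/1 matte for one screen by testing closeness to its ID."""
    return [
        [1.0 if abs(value - screen_id) < tolerance else 0.0 for value in row]
        for row in id_pass
    ]


# Three screens in a 4x4 image; values 0.0, 1.0 and 2.0 all fit in one channel.
id_pass = make_id_pass([(0, 0, 2, 2), (2, 0, 4, 2), (0, 2, 4, 4)], 4, 4)
matte_for_screen_2 = id_matte(id_pass, 2)
```

The appeal of this approach is that one float channel can distinguish hundreds of screens, whereas RGB mattes give only three per image.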

In Nuke, I created a setup which took any number of footage variations and randomly assigned them to ID mattes, which were subsequently piped into STMaps. The result was a ‘rig’ in which I could switch any footage for another, replace any one screen with particular footage if I wanted to, and/or change the randomisation of generic screens at any time.
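The logic of such a rig can be sketched outside Nuke in a few lines of plain Python. This is not the actual node setup; `assign_footage`, `stmap_lookup`, the seed parameter, and the override dictionary are hypothetical names assumed for illustration. The sketch shows a seeded random assignment of clips to screen IDs, with per-screen overrides, and a nearest-neighbour lookup that mimics what an STMap does: using a pixel's U and V values as coordinates into the source footage.

```python
import random


def assign_footage(screen_ids, clips, seed=0, overrides=None):
    """Pick a clip for every screen ID, reproducibly for a given seed.

    overrides: optional {screen_id: clip} mapping for screens that must
    show specific footage regardless of the random pick.
    """
    rng = random.Random(seed)
    assignment = {sid: rng.choice(clips) for sid in screen_ids}
    assignment.update(overrides or {})
    return assignment


def stmap_lookup(frame, u, v):
    """Sample a frame (list of rows) at normalised coordinates u, v in [0, 1].

    Nearest-neighbour only, with row 0 treated as v = 0; real STMap
    filtering and image orientation are not modelled here.
    """
    height = len(frame)
    width = len(frame[0])
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return frame[y][x]


clips = ["news", "sport", "weather"]
generic = assign_footage(range(5), clips, seed=1)
with_hero = assign_footage(range(5), clips, seed=1, overrides={3: "hero"})
```

Changing the seed reshuffles the generic screens, while an override pins one screen to specific footage without disturbing the rest.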

It was a technical challenge that I found satisfying, all the more so because the client did the predictable thing and started changing stuff around. But we were ready.

Watching the video, one would never guess the lengths to which artists go to account for things that seem out of scope for a commercial. Most people just think about colours, sound, motion, effects, and all the stuff that’s in front of them; but as CG artists, we have to think about the framework behind all that in order to accommodate the eventuality known as client feedback.